If you want to create website screenshots using Python, here are some popular options:

- Playwright - 2020 release

- Puppeteer - 2017 release - not discussed

- Selenium Webdriver - 2002 release

- Urlbox - since 2012

Sample Code on GitHub showing all the examples in this article

TL;DR

For new projects I always try Urlbox first, as rendering many websites correctly is difficult. Its sandbox mode is great for getting quick feedback, and its proxy support is useful for testing.

For new, complex projects I favour Playwright over Puppeteer, as Playwright has official Python support. Good discussion

For legacy projects I still support Selenium, which is the oldest option and the most complex to set up. Good discussion

1. Playwright

Playwright is built for testing, and its screenshotting is excellent.

.. the needs of end-to-end testing. Playwright supports all modern rendering engines including Chromium, WebKit, and Firefox. Test on Windows, Linux, and macOS, locally or on CI, headless or headed.

The Python version has 7.8k stars on GitHub, and its last release was on 4th Jan 2023, i.e. it is an active project. Interestingly, the parent JavaScript/TypeScript/Node project has 46k stars, and there are .NET and Java implementations too.

To install, follow the docs:

```bash
# I'm using Python 3.8.10 on Ubuntu 20.04 on Windows WSL for dev, Ubuntu 20.04 for production

# upgrade pip (22.3.1 at time of writing)
pip install --upgrade pip

# 1.28.0 is the package version from pip - 1.29.1 is latest on gh repo
pip install pytest-playwright

# installs required browsers
playwright install
```

Let's do the simplest possible thing:

from playwright.sync_api import sync_playwright

with sync_playwright() as p:

browser = p.chromium.launch()

page = browser.new_page()

page.goto("http://playwright.dev")

print(page.title())

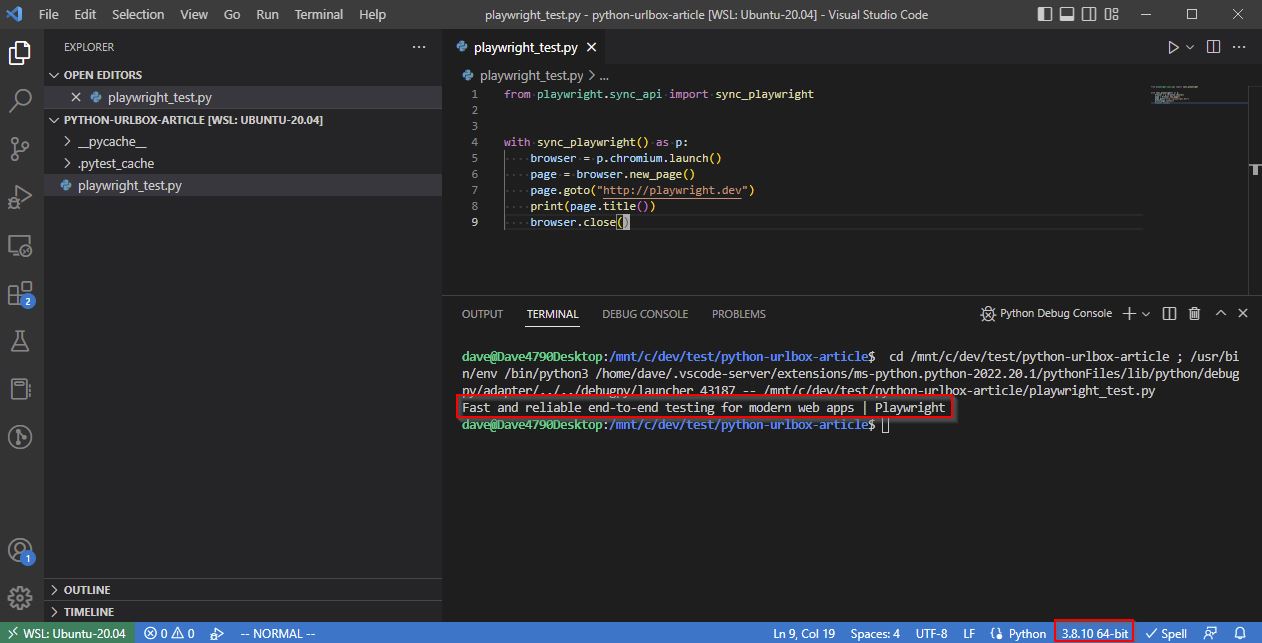

browser.close()The output is shown below (VSCode)

It got the page title and we're running the 3.8.10 Python interpreter.

Screenshots are taken with the page.screenshot method:

from playwright.sync_api import sync_playwright

with sync_playwright() as p:

browser = p.chromium.launch()

page = browser.new_page()

page.goto("http://playwright.dev")

# print(page.title())

page.screenshot(path="screenshot.png")

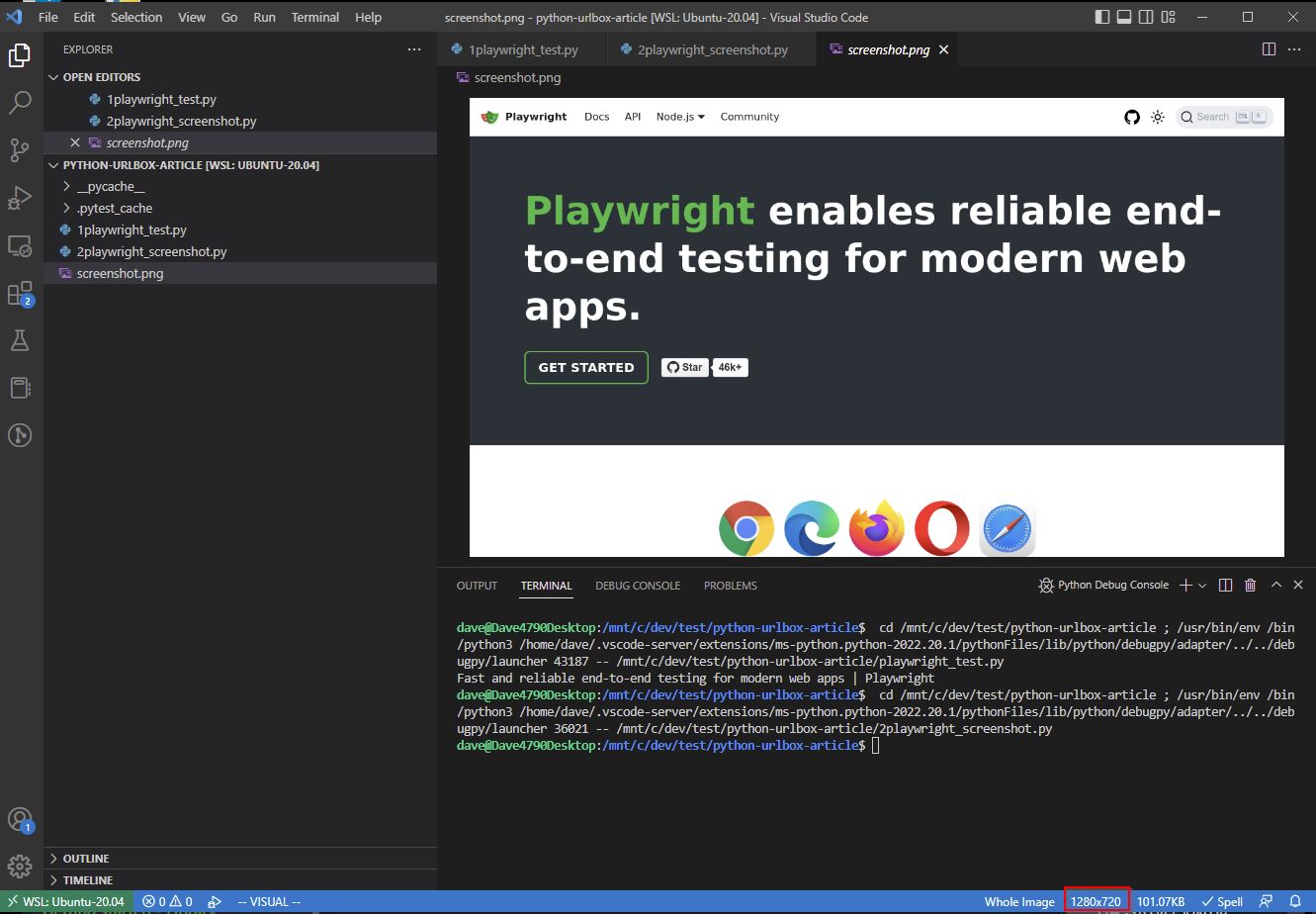

browser.close()and the output:

It worked!

Notice the default screenshot size is 1280 wide x 720 high, even though playwright.dev is a much longer page.

Full page

```python
# swap this in for the screenshot line above; this time gives 1280x3364
page.screenshot(path="screenshot.png", full_page=True)
```

Sometimes this doesn't work as intended.

For the rest of this section I'll show the parts of Playwright that have helped me most:

from playwright.sync_api import sync_playwright

with sync_playwright() as p:

browser = p.chromium.launch()

ua = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.87 Safari/537.36"

context = browser.new_context(

# Passing a different user agent

user_agent=ua,

# Forcing a larger viewport to make facebook play well

# https://playwright.dev/docs/next/emulation#viewport

viewport={"width":1200, "height":2000}

)

page = context.new_page()

# A public facebook post

url="https://www.facebook.com/djhmateer/posts/pfbid0WK2FACHyfyBi1Lg9intnH3SmLHNRYDTfzmGZgjFqSoQAnitAz8ZVdRF1nqmx9JX1l"

page.goto(url)

page.screenshot(path="screenshot.png")

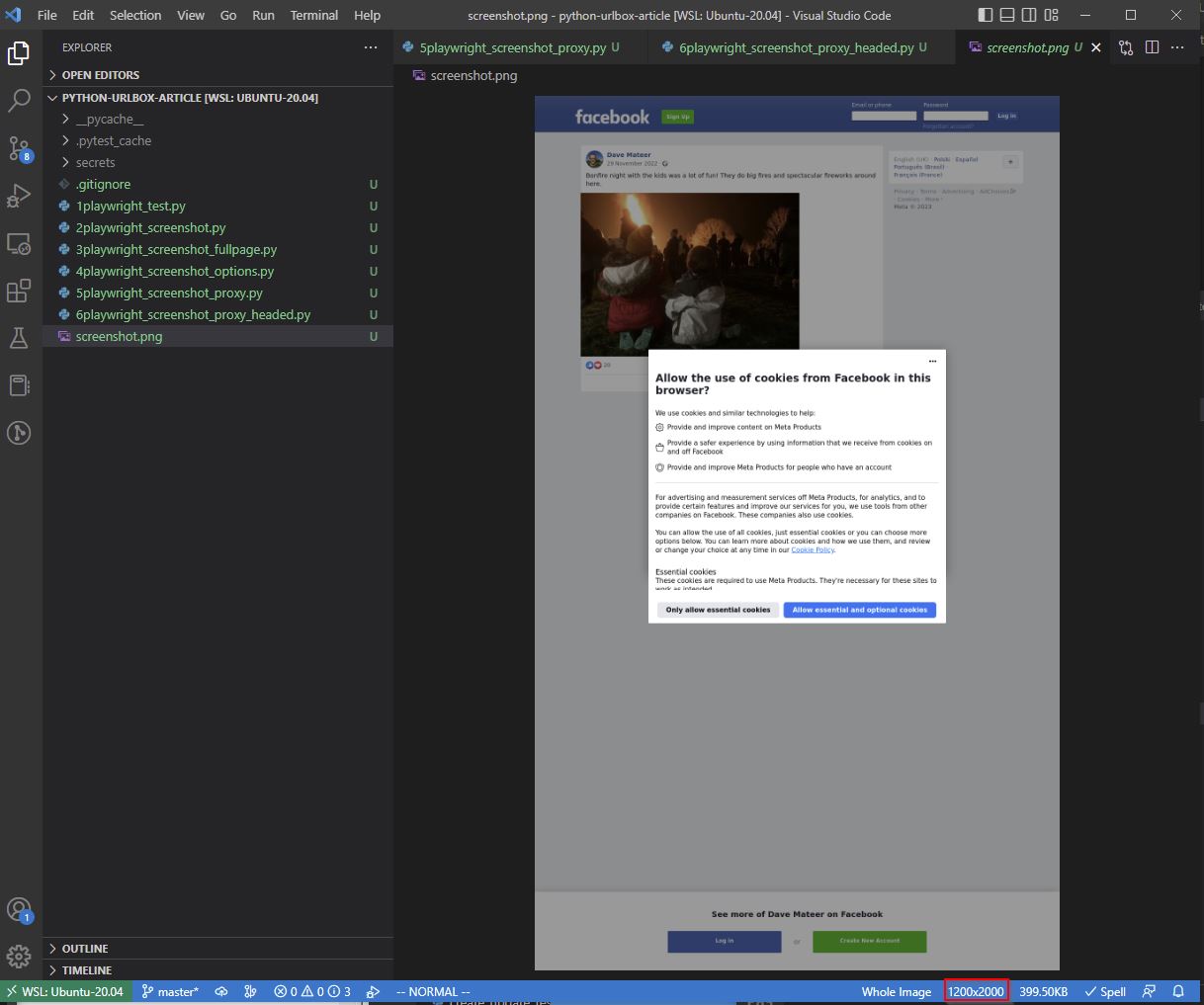

browser.close()The result is as expected.

A 1200x2000 page showing everying we want.

Proxy

We use https://brightdata.com/ for proxying when we want to appear to come from different IP addresses on each request.

from playwright.sync_api import sync_playwright

from pathlib import Path

with sync_playwright() as p:

# browser = p.chromium.launch()

# read secrets from a directory which isn't checked into git

# you will need to create this directory and files

username = Path('secrets/proxy-username.txt').read_text()

password = Path('secrets/proxy-password.txt').read_text()

browser = p.chromium.launch(

# Use a proxy to appear as if we're coming from a different IP address each time

proxy={

"server": 'http://zproxy.lum-superproxy.io:22225',

"username": username,

"password": password

}

)

ua = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.87 Safari/537.36"

context = browser.new_context(

user_agent=ua,

viewport={"width":1200, "height":2000}

)

page = context.new_page()

# shows user agent and IP address

url = "http://whatsmyuseragent.org/"

page.goto(url)

page.screenshot(path="screenshot.png")

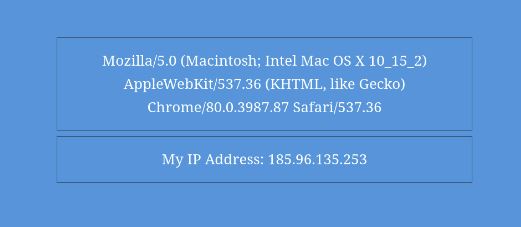

browser.close()Resulting screenshot is:

This IP address is in Israel, and I'm in the UK so the proxy has worked.

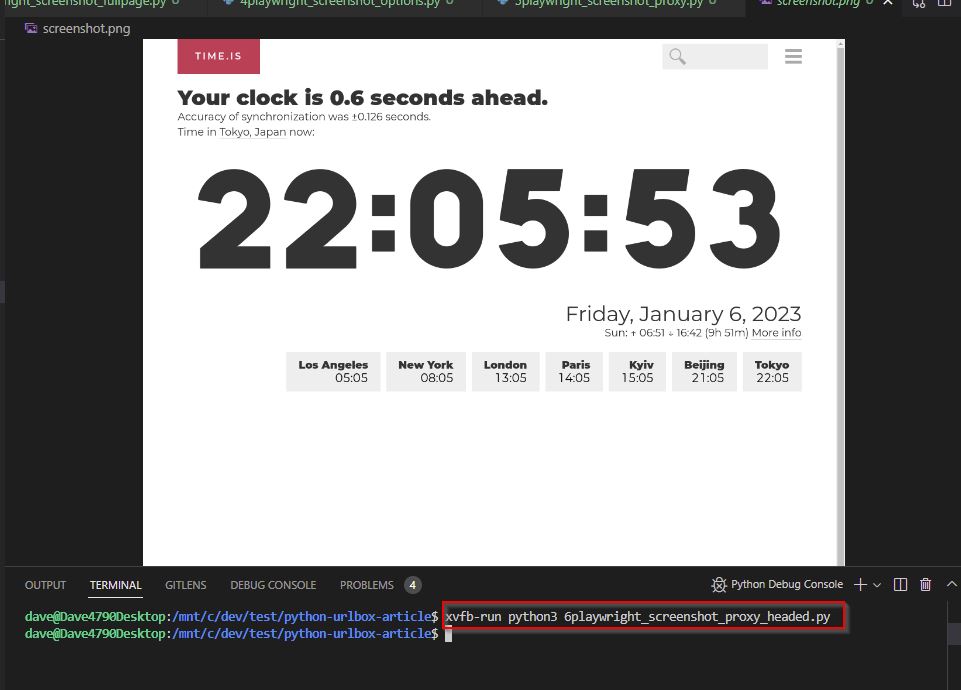
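Every script in this article reads credentials with Path(...).read_text(). A small helper can centralise that, strip the trailing newline that most text editors add (which would otherwise end up inside the proxy password), and fail with a clearer message when a file is missing or empty. This is a sketch; `read_secret` is a hypothetical name, not part of any library, and it assumes the secrets/<name>.txt layout used above.

```python
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "secrets") -> str:
    """Read <secrets_dir>/<name>.txt with surrounding whitespace removed.

    read_secret is a hypothetical helper for this article, not a library API.
    """
    path = Path(secrets_dir) / f"{name}.txt"
    try:
        # strip() drops the trailing newline editors usually add
        value = path.read_text(encoding="utf-8").strip()
    except FileNotFoundError:
        raise FileNotFoundError(
            f"Missing secret file {path}; create it and keep the directory out of git"
        ) from None
    if not value:
        raise ValueError(f"Secret file {path} is empty")
    return value
```

Usage: `username = read_secret("proxy-username")` and `password = read_secret("proxy-password")` replace the two Path(...) lines.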

Headed mode

Sometimes websites (Facebook!) don't like headless browsers connecting to them, so let's make a headed (or headful) connection.

We'll need to install an X virtual framebuffer (Xvfb), as there is no screen for Chromium to render to (especially on the server when we go to production).

```bash
# install the virtual frame buffer
sudo apt install xvfb

# run screenshotter in headed mode
xvfb-run python3 6playwright_screenshot_proxy_headed.py
```

then

```python
# 6playwright_screenshot_proxy_headed.py
from pathlib import Path

from playwright.sync_api import sync_playwright

with sync_playwright() as p:
    # .strip() removes a trailing newline, which would otherwise break proxy auth
    username = Path('secrets/proxy-username.txt').read_text().strip()
    password = Path('secrets/proxy-password.txt').read_text().strip()

    browser = p.chromium.launch(
        # Playwright runs in headless mode by default
        # some sites eg Facebook, may not like this
        # https://playwright.dev/docs/next/debug#headed-mode
        # we need to run using a virtual xserver
        headless=False,
        # Let's use a proxy to appear as if we're coming from a different IP address
        # each time
        proxy={
            "server": 'http://zproxy.lum-superproxy.io:22225',
            "username": username,
            "password": password,
        },
        # Start the headed browser window maximised so that we can get a 1200x2000 viewport
        args=['--start-maximized'],
    )

    ua = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.87 Safari/537.36"
    context = browser.new_context(
        user_agent=ua,
        viewport={"width": 1200, "height": 2000},
    )
    page = context.new_page()

    # a public facebook post
    # url = "https://www.facebook.com/djhmateer/posts/pfbid0WK2FACHyfyBi1Lg9intnH3SmLHNRYDTfzmGZgjFqSoQAnitAz8ZVdRF1nqmx9JX1l"

    # shows user agent and IP address
    url = "http://whatsmyuseragent.org/"
    page.goto(url)
    page.screenshot(path="screenshot.png")
    browser.close()
```

Result:

Headed mode worked.

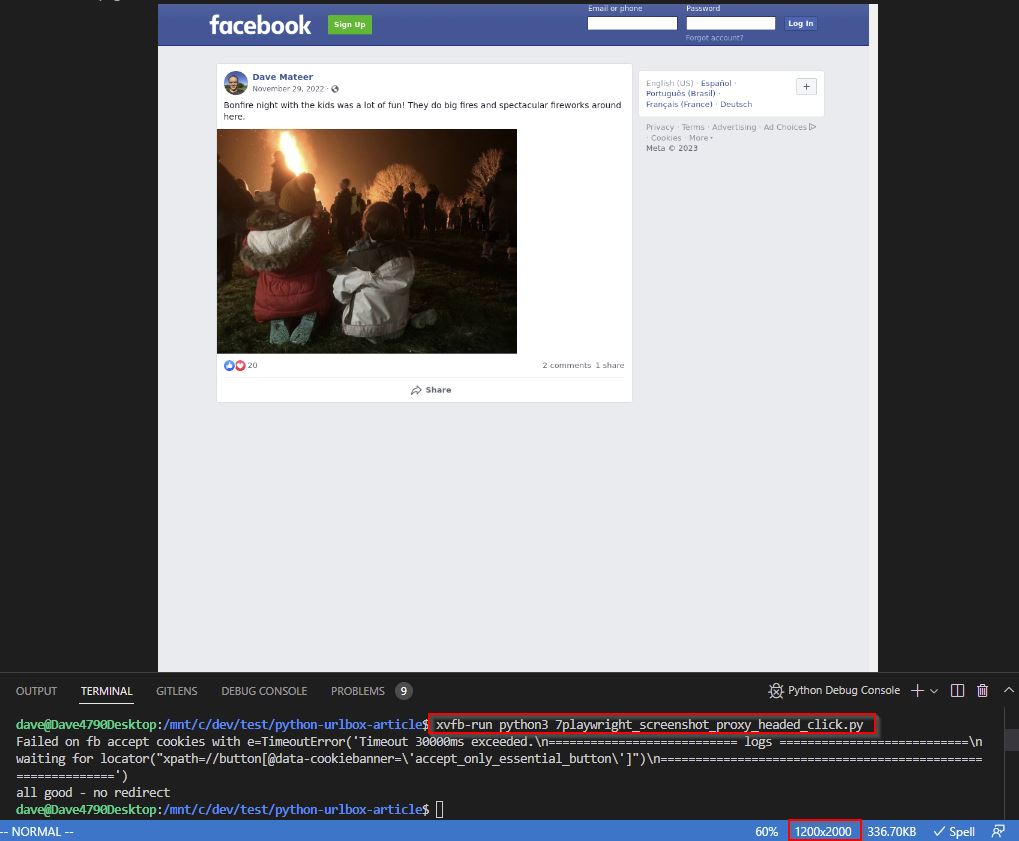
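The proxy scripts repeat the same launch and context settings. One way to keep them from drifting apart is to build the keyword arguments for p.chromium.launch() and browser.new_context() in one place. This is a sketch: `chromium_launch_options` and `context_options` are hypothetical helper names, while the keys they return (`headless`, `proxy`, `args`, `user_agent`, `viewport`) are the Playwright parameters already used above.

```python
from typing import Any, Dict, Optional

PROXY_SERVER = "http://zproxy.lum-superproxy.io:22225"
DEFAULT_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/80.0.3987.87 Safari/537.36"
)


def chromium_launch_options(headless: bool = True,
                            proxy_username: Optional[str] = None,
                            proxy_password: Optional[str] = None) -> Dict[str, Any]:
    """Keyword arguments for p.chromium.launch() (hypothetical helper)."""
    options: Dict[str, Any] = {"headless": headless}
    if proxy_username and proxy_password:
        options["proxy"] = {
            "server": PROXY_SERVER,
            "username": proxy_username,
            "password": proxy_password,
        }
    if not headless:
        # headed runs (under xvfb-run) start maximised, as in the script above
        options["args"] = ["--start-maximized"]
    return options


def context_options(width: int = 1200, height: int = 2000,
                    user_agent: str = DEFAULT_UA) -> Dict[str, Any]:
    """Keyword arguments for browser.new_context() (hypothetical helper)."""
    return {"user_agent": user_agent, "viewport": {"width": width, "height": height}}


# usage inside `with sync_playwright() as p:`
# browser = p.chromium.launch(**chromium_launch_options(False, username, password))
# context = browser.new_context(**context_options())
```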

Clicking

A cookie-consent popup isn't what we want in a screenshot, so let's accept the cookies first and then take the screenshot:

```python
import time
from pathlib import Path

from playwright.sync_api import sync_playwright

# run using this command
# xvfb-run python3 7playwright_screenshot_proxy_headed_click.py

with sync_playwright() as p:
    username = Path('secrets/proxy-username.txt').read_text().strip()
    password = Path('secrets/proxy-password.txt').read_text().strip()

    browser = p.chromium.launch(
        headless=False,
        proxy={
            "server": 'http://zproxy.lum-superproxy.io:22225',
            "username": username,
            "password": password,
        },
        args=['--start-maximized'],
    )

    ua = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.87 Safari/537.36"
    context = browser.new_context(
        user_agent=ua,
        viewport={"width": 1200, "height": 2000},
    )
    page = context.new_page()

    # maybe there will be a cookie popup
    # so click accept cookies on facebook.com to try to alleviate
    try:
        page.goto("http://www.facebook.com", wait_until='networkidle')
        time.sleep(5)
        accept_button = page.locator("//button[@data-cookiebanner='accept_only_essential_button']")
        accept_button.click()
        print('click done - fb click worked')
        # linux server needs a sleep otherwise facebook cookie won't have worked and we'll get a popup on next page
        time.sleep(5)
    except Exception as e:
        print(f'Failed on fb accept cookies with {e=}')

    # a public facebook post
    url = "https://www.facebook.com/djhmateer/posts/pfbid0WK2FACHyfyBi1Lg9intnH3SmLHNRYDTfzmGZgjFqSoQAnitAz8ZVdRF1nqmx9JX1l"

    # https://github.com/microsoft/playwright/issues/12182
    # sometimes a timeout
    # wait until all network traffic is done (or enough is done for a good screenshot)
    response = page.goto(url, timeout=60000, wait_until='networkidle')

    # detect if there is a 30x redirect
    # which means that the screenshot would show a login page instead of the intended page
    if response.request.redirected_from is None:
        print("all good - no redirect")
        page.screenshot(path="screenshot.png")
    else:
        print(f'normal control flow. redirect to login problem! This happens on /permalink and /user/photo direct call {response.request.redirected_from.url}')

    browser.close()
```

The accept-cookies click worked, we've got a nice big 1200x2000 page, and we've screenshotted the public Facebook post.

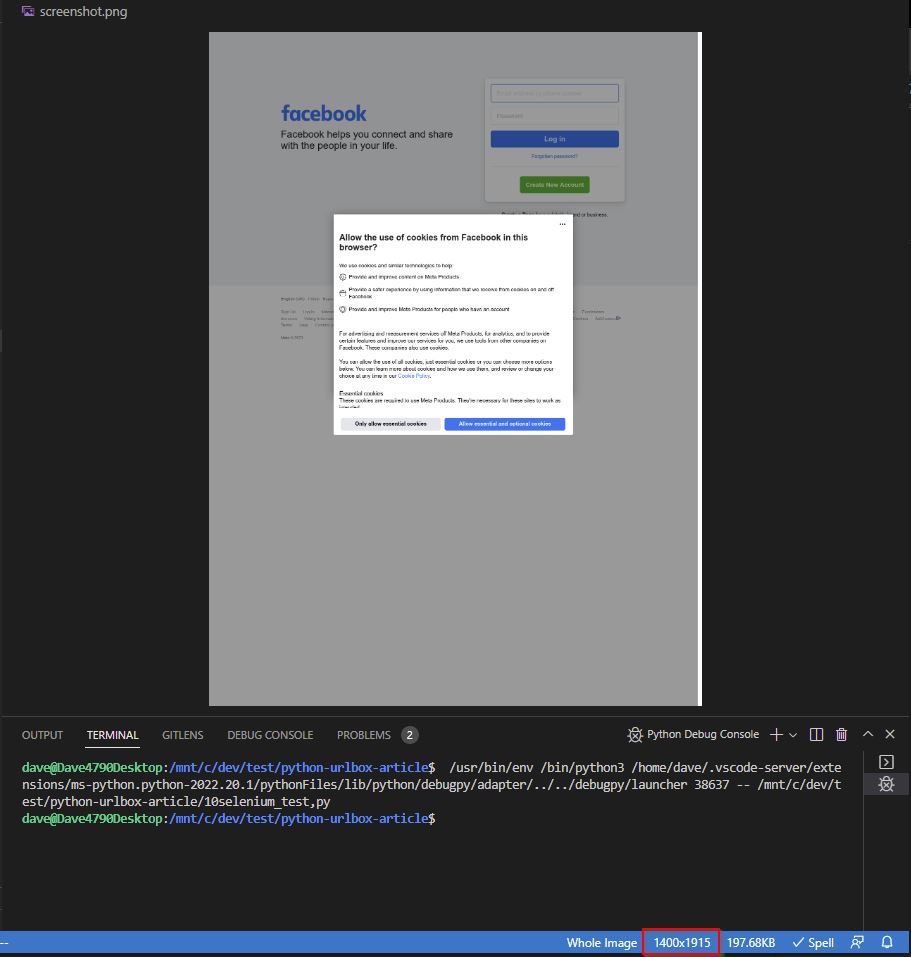

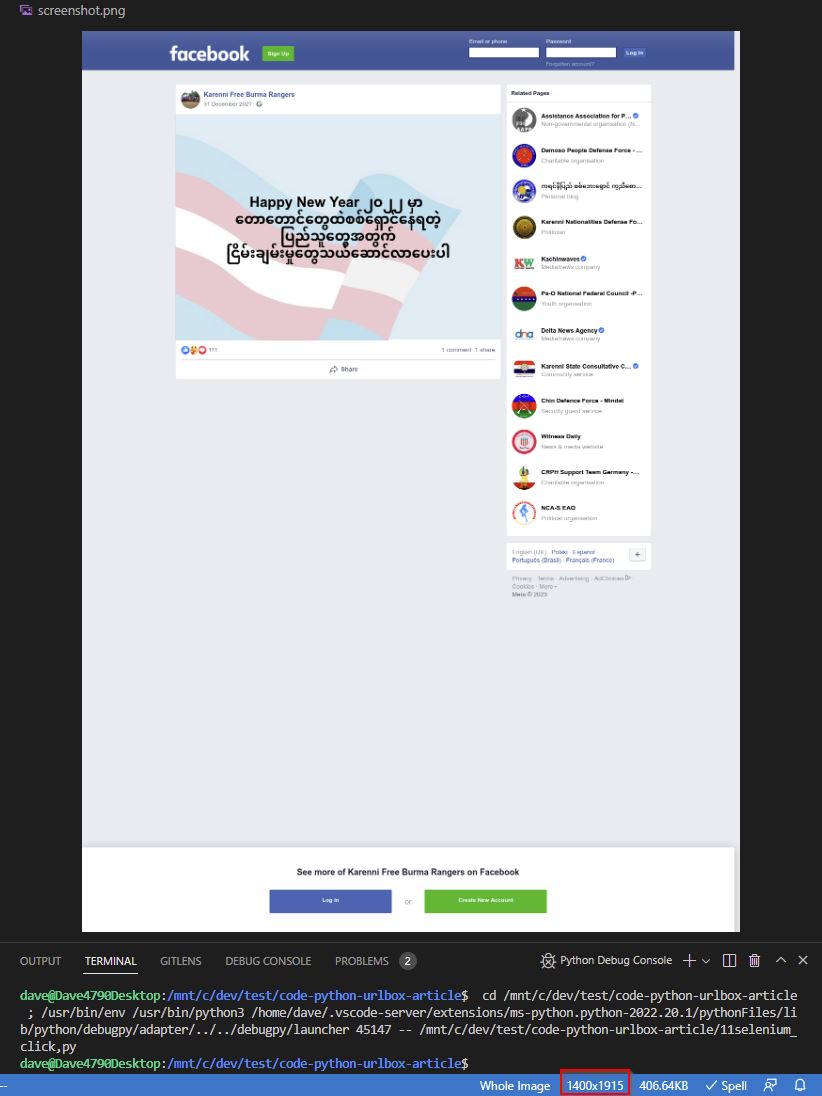
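The script above only looks at the last hop via response.request.redirected_from. Playwright's Request.redirected_from returns the previous request in the chain (or None), so walking it backwards recovers every URL the browser went through, which is handy for logging where a login redirect came from. A sketch; `redirect_chain` is a hypothetical helper that relies only on the `.url` and `.redirected_from` attributes.

```python
from typing import List


def redirect_chain(request) -> List[str]:
    """Return the URLs from the original request to the final one.

    `request` is a Playwright Request (e.g. response.request); only its
    .url and .redirected_from attributes are used.
    """
    urls = []
    current = request
    while current is not None:
        urls.append(current.url)
        current = current.redirected_from
    urls.reverse()  # oldest request first
    return urls


# usage inside the script above:
# response = page.goto(url, timeout=60000, wait_until='networkidle')
# chain = redirect_chain(response.request)
# if len(chain) > 1:
#     print("redirected:", " -> ".join(chain))
```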

2. Selenium Webdriver (Firefox)

All major browsers are supported (Firefox, Chrome), including legacy ones such as Internet Explorer.

I'm including this because I support code that uses it.

Mozilla Geckodriver - 0.32.0 - 2022-10-13

```bash
# Python Language Bindings - selenium-4.7.2-py3 - 2nd Dec 2022
pip3 install selenium

# Install browser
sudo apt install firefox -y

# Selenium requires a driver to interface with Firefox
# Gecko driver
# check the releases page for newer version numbers
cd ~
wget https://github.com/mozilla/geckodriver/releases/download/v0.31.0/geckodriver-v0.31.0-linux64.tar.gz
tar -xvzf geckodriver*
chmod +x geckodriver
sudo mv geckodriver /usr/local/bin/

# unicode font support eg Burmese characters
sudo apt install fonts-noto -y
```

Unicode fonts on Stackoverflow

then

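```python
from selenium import webdriver

options = webdriver.FirefoxOptions()
# options.headless = True works in Selenium 4.7 but was deprecated in 4.8;
# passing the -headless argument works across versions
options.add_argument("-headless")

driver = webdriver.Firefox(options=options)
driver.set_window_size(1400, 2000)

# Navigate to Facebook
driver.get("http://www.facebook.com")

# save a screenshot
driver.save_screenshot("screenshot.png")

# shut down Firefox and geckodriver
driver.quit()
```

So this works in a similar way to Playwright.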

Clicking

```python
import time

from selenium import webdriver
from selenium.webdriver.common.by import By

options = webdriver.FirefoxOptions()
# options.headless = True was deprecated in Selenium 4.8; -headless works across versions
options.add_argument("-headless")

driver = webdriver.Firefox(options=options)
driver.set_window_size(1400, 2000)

# Navigate to Facebook
driver.get("http://www.facebook.com")

# click the cookie banner button that accepts only essential cookies
accept_button = driver.find_element(By.XPATH, "//button[@data-cookiebanner='accept_only_essential_button']")
accept_button.click()

# cookie banner dismissed, now go to the page we actually want
driver.get("https://www.facebook.com/watch/?v=343188674422293")
time.sleep(6)

# save a screenshot
driver.save_screenshot("screenshot.png")

# shut down Firefox and geckodriver
driver.quit()
```

The screen size is correct, and the Unicode text renders correctly. However, I've used a simple 6-second sleep instead of waiting for all network requests to finish; Playwright gives greater control here.
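If you want something a little smarter than a fixed sleep in Selenium, one option is to poll document.readyState through driver.execute_script() until it reports "complete", then allow a short settle time. This is not equivalent to Playwright's networkidle (XHR and lazy-loaded content can still arrive after the load event), so treat it as a sketch; `wait_for_page_load` and its default timings are hypothetical choices.

```python
import time


def wait_for_page_load(driver, timeout: float = 30.0, poll: float = 0.5,
                       settle: float = 1.0) -> bool:
    """Poll document.readyState until 'complete' or until timeout expires.

    `driver` is a Selenium WebDriver; only driver.execute_script() is used.
    Returns True if the page reported 'complete', False on timeout.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if driver.execute_script("return document.readyState") == "complete":
            # give late XHR / lazy-loaded content a moment to arrive
            time.sleep(settle)
            return True
        time.sleep(poll)
    return False


# usage: replace time.sleep(6) with
# if not wait_for_page_load(driver):
#     print("page did not finish loading in time")
```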

We have found that doing many renders with the same driver can leak memory, so it is a good idea to quit and recreate the driver for each render.
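Recreating the driver per render fits naturally into a try/finally, so Firefox and geckodriver are shut down with driver.quit() even when a page fails to load. A sketch: `screenshot_each` and `make_firefox_driver` are hypothetical helpers, the `make_driver` parameter exists so the browser setup can be swapped (or stubbed), and Selenium is imported inside the factory so the loop itself does not depend on it.

```python
from pathlib import Path
from typing import Callable, Iterable, List


def make_firefox_driver():
    # imported here so screenshot_each can be used without Selenium installed
    from selenium import webdriver

    options = webdriver.FirefoxOptions()
    options.add_argument("-headless")
    driver = webdriver.Firefox(options=options)
    driver.set_window_size(1400, 2000)
    return driver


def screenshot_each(urls: Iterable[str], out_dir: str = ".",
                    make_driver: Callable = make_firefox_driver) -> List[Path]:
    """Render each URL in a brand-new browser, quitting it afterwards."""
    saved = []
    for i, url in enumerate(urls):
        driver = make_driver()
        try:
            driver.get(url)
            path = Path(out_dir) / f"screenshot-{i}.png"
            driver.save_screenshot(str(path))
            saved.append(path)
        finally:
            # always release the browser process, even if get() or the save fails
            driver.quit()
    return saved
```

Usage: `screenshot_each(["http://www.facebook.com", "https://time.is/"])` writes screenshot-0.png and screenshot-1.png, each from a fresh Firefox.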

3. Urlbox

Screenshot as a Service API

Urlbox makes it much easier to render website screenshots without having to worry about low-level infrastructure <notetoself>which I do love, however sometimes you need to get things done, make money, and hit deadlines, not mess around</notetoself>.

Signing up to Urlbox is free and fast.

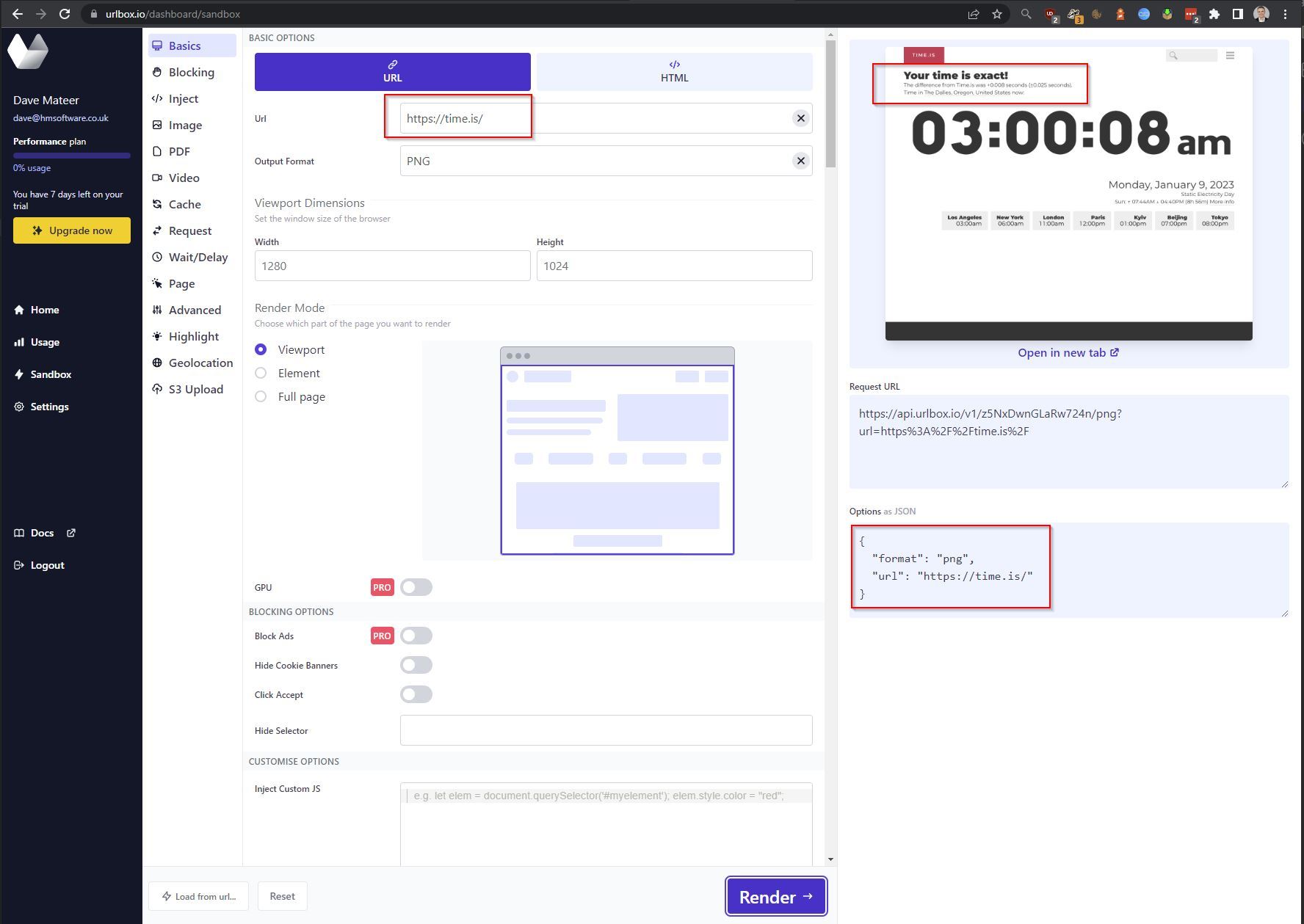

The sandbox is a perfect place to explore what Urlbox can do. https://time.is/ is a good test because it is a long page (chopped in the screenshot above) and it shows the caller's location, in this case Oregon, which is where Urlbox initiated the request.

API

The Urlbox docs are a good starting place.

There is a handy Urlbox package on PyPI, with its source on GitHub. I've opened an issue about a dependency conflict, as it depends on requests 2.26.0. I worked around it, but if you have any problems, leave a message on the issue and I'll try to help.

```bash
# 1.0.6 - Dec 2021
pip install urlbox
```

then

```python
from pathlib import Path

from urlbox import UrlboxClient

# create a secrets directory and text files with your details in
# .strip() removes any trailing newline from the files
api_key = Path('secrets/urlbox-api-key.txt').read_text().strip()
api_secret = Path('secrets/urlbox-api-secret.txt').read_text().strip()

urlbox_client = UrlboxClient(api_key=api_key, api_secret=api_secret)

# Make a request to the Urlbox API
url = "https://time.is/"

# notice the options in the screenshot above
# easy to copy and paste from the sandbox into code!
options = {
    "format": "png",
    "url": url,
}

response = urlbox_client.get(options)

# save screenshot image to screenshot.png:
with open("screenshot.png", "wb") as f:
    f.write(response.content)
```

https://urlbox.com/docs/examplecode/python shows a lower-level way of calling the API via HTTP.

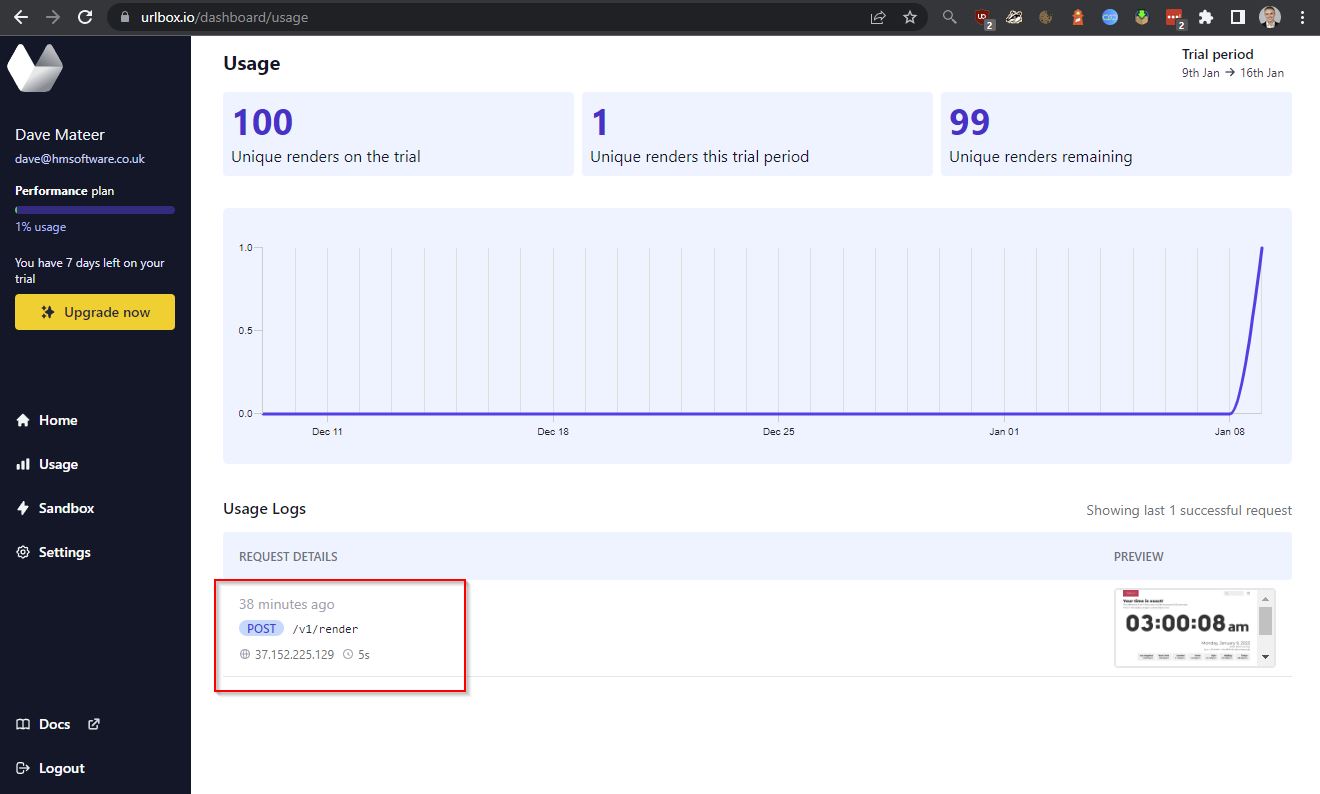
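The lower-level route builds a signed "render link" URL instead of using the client. As I understand the Urlbox docs, the v1 format is https://api.urlbox.io/v1/<api_key>/<token>/<format>?<query>, where the token is an HMAC-SHA1 of the query string keyed with your API secret; check the current docs before relying on this. `render_link` is a hypothetical helper name, and sending booleans as lowercase true/false is an assumption about how Urlbox parses them.

```python
import hmac
from hashlib import sha1
from urllib.parse import urlencode


def render_link(api_key: str, api_secret: str, options: dict) -> str:
    """Build a signed Urlbox render link (sketch of the v1 format described above)."""
    options = dict(options)
    fmt = options.pop("format", "png")  # format goes in the path, not the query
    # assumption: Urlbox expects lowercase true/false for boolean options
    query = urlencode({k: str(v).lower() if isinstance(v, bool) else v
                       for k, v in options.items()})
    token = hmac.new(api_secret.encode(), query.encode(), sha1).hexdigest()
    return f"https://api.urlbox.io/v1/{api_key}/{token}/{fmt}?{query}"
```

Fetching the resulting URL with any HTTP client (for example requests.get) should return the image bytes, in the same way as urlbox_client.get.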

Options

Usage shows what you've done via the sandbox or API. Interestingly I've done multiple calls to the API while testing but it only shows 1. Caching is turned on by default on the API.

from urlbox import UrlboxClient

import sys

from pathlib import Path

api_key = Path('secrets/urlbox-api-key.txt').read_text()

api_secret = Path('secrets/urlbox-api-secret.txt').read_text()

urlbox_client = UrlboxClient(api_key=api_key, api_secret=api_secret)

url = "https://time.is/"

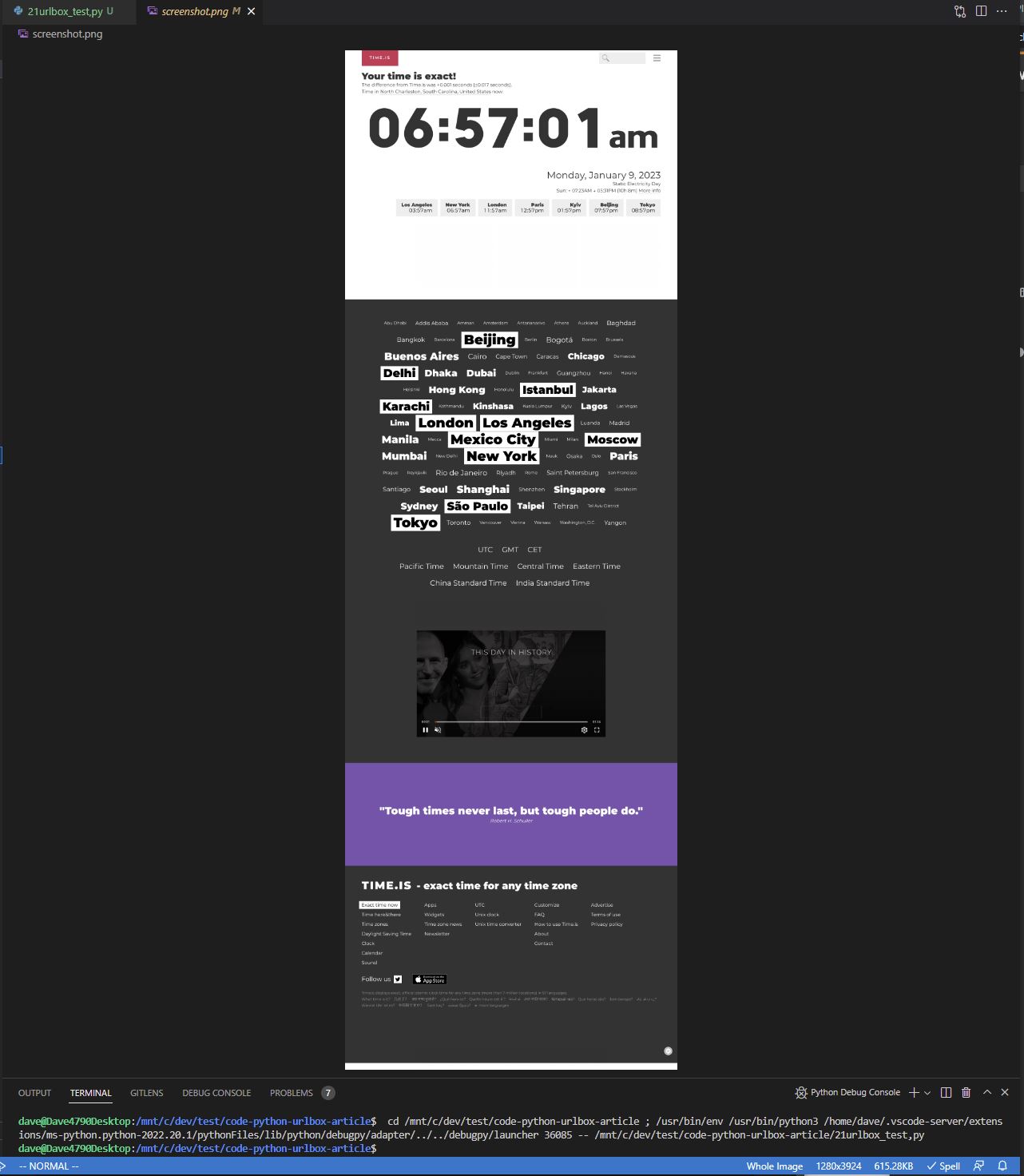

options = {

"format": "png",

"full_page": True,

"force": True, # no cache

"url": url

}

response = urlbox_client.get(options)

data = response.content

try:

# if we can decode the response.content then it is an error

# as the screenshot we are expecting is binary

json_error = str(data, "utf-8")

print(json_error)

sys.exit()

except (UnicodeDecodeError, AttributeError):

# can't decode the response.content so it is the screenshot as binary data

pass

# save screenshot image to screenshot.png:

with open("screenshot.png", "wb") as f:

f.write(response.content)

API worked giving me back a full screen image, not cached.

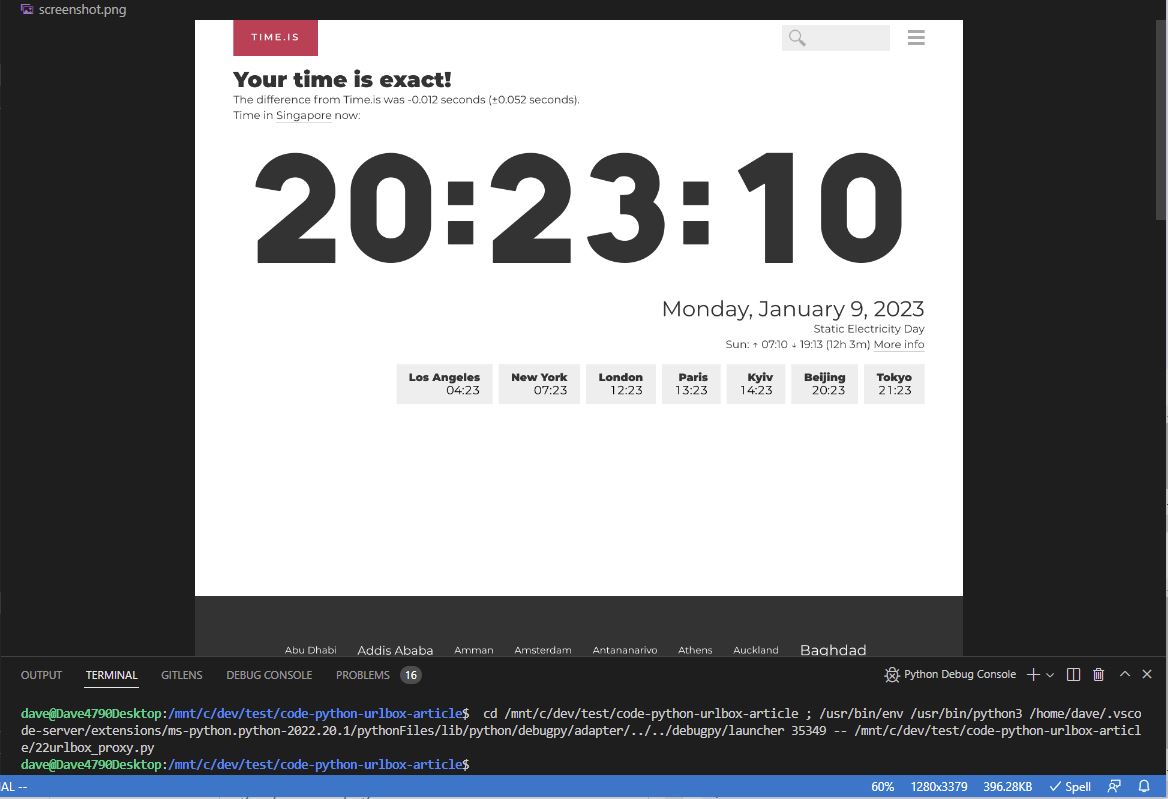
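Decoding the body as UTF-8 works because the first byte of a PNG (0x89) is not valid UTF-8, but it is an indirect test. A more explicit check compares the first eight bytes against the fixed PNG signature and also looks at the HTTP status code. A sketch, assuming the client returns a requests.Response (so `.status_code` and `.content` exist) and that you asked for "format": "png"; `check_png_response` is a hypothetical name.

```python
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # the fixed 8-byte header of every PNG file


def check_png_response(status_code: int, content: bytes) -> bytes:
    """Return the PNG bytes, or raise RuntimeError with the API's error text."""
    if 200 <= status_code < 300 and content.startswith(PNG_SIGNATURE):
        return content
    # errors come back as text/JSON; show the start of it
    message = content[:500].decode("utf-8", errors="replace")
    raise RuntimeError(f"Urlbox returned HTTP {status_code}: {message}")


# usage:
# png = check_png_response(response.status_code, response.content)
# with open("screenshot.png", "wb") as f:
#     f.write(png)
```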

Proxy

Let's use https://brightdata.com/ as a proxy.

The Urlbox proxy docs show the format we need to pass:

[user]:[password]@[address]:[port]

```python
import sys
from pathlib import Path

from urlbox import UrlboxClient

api_key = Path('secrets/urlbox-api-key.txt').read_text().strip()
api_secret = Path('secrets/urlbox-api-secret.txt').read_text().strip()
urlbox_client = UrlboxClient(api_key=api_key, api_secret=api_secret)

proxy_username = Path('secrets/proxy-username.txt').read_text().strip()
proxy_password = Path('secrets/proxy-password.txt').read_text().strip()

url = "https://time.is/"

# Urlbox format: [user]:[password]@[address]:[port]
proxy = f"{proxy_username}:{proxy_password}@zproxy.lum-superproxy.io:22225"

options = {
    "format": "png",
    "url": url,
    "force": True,  # no cache
    "full_page": True,
    "proxy": proxy,
}

response = urlbox_client.get(options)
data = response.content

try:
    # if we can decode the response.content then it is an error,
    # as the screenshot we are expecting is binary
    json_error = str(data, "utf-8")
    print(json_error)
    sys.exit(1)  # non-zero exit status signals failure
except (UnicodeDecodeError, AttributeError):
    # can't decode the response.content, so it is the screenshot as binary data
    pass

# save screenshot image to screenshot.png:
with open("screenshot.png", "wb") as f:
    f.write(data)
```

Result:

The proxying worked, as we're now coming from Singapore.
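The Playwright scripts keep the proxy as a server URL plus a separate username and password, while Urlbox wants a single [user]:[password]@[address]:[port] string. A small converter lets both read the same settings. A sketch; `urlbox_proxy` is a hypothetical name, and because I'm not aware of an escaping rule for this format, it simply refuses credentials containing ':' or '@'.

```python
from urllib.parse import urlsplit


def urlbox_proxy(server: str, username: str, password: str) -> str:
    """Convert Playwright-style proxy settings to Urlbox's user:password@host:port."""
    parts = urlsplit(server)
    if not parts.hostname or parts.port is None:
        raise ValueError(f"expected a server URL like http://host:port, got {server!r}")
    for value in (username, password):
        if ":" in value or "@" in value:
            # these characters would make the combined string ambiguous
            raise ValueError("credentials containing ':' or '@' are not supported")
    return f"{username}:{password}@{parts.hostname}:{parts.port}"


# usage:
# proxy = urlbox_proxy("http://zproxy.lum-superproxy.io:22225", proxy_username, proxy_password)
```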

Pushing the limits

Urlbox has a plethora of advanced features (scroll down and explore them in the sandbox!), including:

```json
{
  "format": "png",
  "url": "https://www.facebook.com/photo/?fbid=1329142910787472&set=a.132433247125117",
  "force": true,
  "fail_on_4xx": true,
  "fail_on_5xx": true
}
```

Fail on 4xx and 5xx is very useful. My use case got trickier because I needed to detect a redirect (shown in the Playwright section above), so ultimately I had to resort to that approach.

Conclusion

I've been screenshotting websites professionally for 5 years. My last foray into Facebook with Playwright took 2 months to get right.

In this article we looked at:

- Playwright - the newest of the screenshotting libraries, which I would recommend for new projects

- Selenium Webdriver - I support this but wouldn't recommend it except for legacy projects

- Urlbox - a screenshot as a service API.

I would recommend exploring Urlbox first to avoid technical headaches. Fall back to Playwright if you have to.